Usability Engineering

Usability testing

Usability testing is the 'gold-standard' usability engineering methodology. In a usability test, actual system users are audio and video recorded 'Thinking Aloud' (verbalizing their thoughts) as they perform representative system tasks. Annotated transcripts of the test subject's audio and video recordings are then created using a validated usability coding scheme. Once this annotated transcript is created, the test results can then be analyzed to create both quantitative and qualitative usability results.

Notes

Strengths and weaknesses

Strengths

- Low cost - the software and equipment necessary for conducting a usability test are now free to low-cost. Depending on the cost of the hired user participants, usability tests can be reasonably inexpensive (relative to the quality of results that they return)

- Usability testing results are representative of real system users - usability testing is the best methodology for returning high quality, representative usability results

- Few experts needed to run the tests - only two usability experts are needed to conduct a usability test

- Portable - current portable, low-cost technology (i.e. laptop computers, portable digital video cameras, etc.) enables usability tests to be conducted whenever, wherever

Weaknesses

- Doesn't systematically create improvement strategies - the outcome of a usability test is a list of found usability problems for a given system. This doesn't, however, provide much guidance to the system's developers towards how to fix these found issues.

- Can be construed as 'odd' for the users to 'Think Aloud' - some users may find Thinking Aloud to be difficult or uncomfortable, which can possibly affect the results of the study

- More resource intensive than usability inspection methods - it takes more time, money, and people to conduct a usability test than it does to conduct a usability inspection (i.e. Heuristic Evaluation or Cognitive Walkthrough)

Plan

Sample Selection

How many subjects should be selected for the study? Past studies have indicated that a sample of 8-10 subjects can identify up to 80% of an information system's usability problems (Nielsen, 1993; Rubin, 1994). For studies involving inferential statistics, the sample should increase to 15-20 subjects per group.

How should the subjects be selected? To acquire a full range of representative users, it may be necessary to pre-screen the users based on the user profile set (created in phase two of the Rapid Response study design). Ideally, at least one user of every unique user type should be selected for the study (with proportionally more users selected from the more prominent user groups). Unique end users will generate unique usability issues; therefore, to capture such issues, all unique user types should be included in the study.

How should the test subjects be divided during the evaluation? Usability testing can be performed using either a within-group or a between-group study design. A within-group study design occurs when a single group is formed from the entire study sample, and all subjects within that single group are subjected to the same interventions (e.g. the use of a new Electronic Health Record system). A between-group study, on the other hand, occurs when two or more groups are formed from the study sample, and each group is subjected to different interventions (e.g. group #1 uses system X during the test and group #2 uses system Y or no system at all). For both within-group and between-group designs, multiple measurements can be taken over time, which provides the opportunity to compare the evaluation results.

Choosing which study design to use for an evaluation depends on the overall evaluation objective. For example, if the objective of the study was to evaluate the usability of several different prototype systems, a within-group study could suffice. In such a study, all members of the sample group would test each of the prototype systems. A different study objective could be to evaluate and compare the differences in a single system's use by multiple professional types. For such a study, a between-group study design could be chosen, where the subjects, who are divided by professional type (i.e. nurses and physicians), test various tasks on the same system. Such between-group study designs allow for comparisons to be made between the distinct subject groups (and the systems they use). The groups in the between-group study design can be formed in any number of ways, including, but not limited to any combination of the previously derived user profiles (i.e. dividing the groups by technology expertise levels).
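The difference between the two designs can be sketched as a simple assignment plan. This is a minimal illustration, not a prescribed procedure: the function names, the subject labels, and the use of random assignment for the between-group case are all assumptions (the groups could equally be formed from the user profiles, such as professional type).

```python
import random


def within_group(subjects, conditions):
    """Within-group: every subject is exposed to every condition."""
    return {subject: list(conditions) for subject in subjects}


def between_group(subjects, conditions, seed=0):
    """Between-group: one group per condition, each subject sees one condition."""
    shuffled = list(subjects)
    # Random assignment is an assumption; groups may also be formed by profile.
    random.Random(seed).shuffle(shuffled)
    plan = {}
    for index, subject in enumerate(shuffled):
        plan[subject] = [conditions[index % len(conditions)]]
    return plan


subjects = ["S1", "S2", "S3", "S4"]  # hypothetical subject identifiers
print(within_group(subjects, ["prototype A", "prototype B"]))
print(between_group(subjects, ["system X", "system Y"]))
```

In the within-group plan each subject tests both prototypes, while in the between-group plan the four subjects are split evenly across the two systems.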

Summary of the sample selection process of study design

The tasks required to complete the sample selection phase are as follows:

- Choose the number of test subjects.

- A testing group of 8-10 subjects can capture 80% of a system's usability issues; however, 15-20 subjects are needed to conduct inferential statistics.

- Select the test subjects.

- The selected test subjects should be representative of the end-system user population (as defined in the user profile set).

- There should be at least one representative user from each user profile, and proportionally more users from the more prominent user groups.

- Create the study sample groups.

- Within-group or between-group study designs can be chosen (the choice depends on the objective of the study).
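The selection rule above (at least one subject per user profile, with proportionally more from the larger groups) can be sketched as follows. The function name, the largest-remainder rounding, and the example population figures are assumptions made for illustration only.

```python
def allocate_subjects(profile_sizes, total_subjects):
    """Give every profile one subject, then share the rest proportionally."""
    if total_subjects < len(profile_sizes):
        raise ValueError("need at least one subject per user profile")
    allocation = {profile: 1 for profile in profile_sizes}
    remaining = total_subjects - len(profile_sizes)
    population = sum(profile_sizes.values())
    # Largest-remainder rounding: floor each proportional share, then hand
    # the leftover seats to the profiles with the largest fractional parts.
    shares = {p: remaining * size / population for p, size in profile_sizes.items()}
    for profile, share in shares.items():
        allocation[profile] += int(share)
    leftover = total_subjects - sum(allocation.values())
    by_fraction = sorted(shares, key=lambda p: shares[p] - int(shares[p]), reverse=True)
    for profile in by_fraction[:leftover]:
        allocation[profile] += 1
    return allocation


# Hypothetical user population for a hospital system, with 10 test subjects.
print(allocate_subjects({"nurses": 120, "physicians": 60, "clerks": 20}, 10))
```

Guaranteeing one seat per profile before distributing the rest keeps small but distinct user groups from being rounded out of the sample.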

Selection of representative experimental tasks and contexts

When should experimental tasks and contexts be generated? The need to create experimental tasks and contexts depends on the chosen range of experimental control. Naturalistic studies, for instance, have high levels of ecological validity (through the use of real subjects in contextual situations), but sacrifice experimental control in doing so. In such naturalistic studies, direct observations are recorded in the test subject's place of work, as the subject puts the system to regular use. This removes the need for the test designers to create experimental tasks and contexts, as the tasks occur in context naturally.

Laboratory-based evaluations, on the other hand, involve controlled experimental conditions where experimental tasks and contexts often have to be developed as written medical case descriptions by the system evaluators.

How can experimental tasks and contexts be generated? The development of experimental test cases requires careful design. A test case that isn't representative of a real-life situation would produce irrelevant observational data, because the user would be performing tasks that would not naturally occur in their place of work. To prevent this, the experimental cases need to be close approximations of the test subjects' natural work processes. Contextual cases can be developed using a variety of methods. In a medical context, the cases could be adopted from medical training cases (such as the exercises published in the New England Journal of Medicine), or they could be custom created (with the aid of medical experts) to meet the needs of a given study based on real health data.

Cases can also be developed from the workflow analysis results gathered in phase 3 of the RR study design. For example, suppose the system under evaluation is a new electronic hospital triage system that the hospital's nurses will use in place of their previous paper-based system. During the workflow analysis, the analysts would have observed the nurses using the current paper-based triage system, taking notes on such things as what data is recorded in the system and in what order. From those task notes, realistic test case scenarios could then be written for the usability testing of the new triage system.

The format that the final test case scenarios take can vary significantly. One case study format could be a simple, free text description of a hypothetical case, whereby the subject would act out their normal duties after understanding the requirements posed in the textual description. Another, more elaborate test case scenario could involve a script, where subjects are given characters and roles, and must act out their parts accordingly (i.e. a simulated patient-doctor interview could be played out with two subjects, one playing the patient, who is given the duty of providing the doctor with detailed information about their reason for attending the clinic, and the second subject playing the doctor, who must treat the patient as a normal doctor would using the system under study) (Kushniruk, Kaufman, Patel, Levesque, & Lottin, 1996).

Summary of the task selection process of study design

The steps required to complete the task selection phase are as follows:

- Determine if real cases will be used for the test, or if test case scenarios will be used instead.

  - If real cases will be used, then:

    - There is no need for the usability test designers to design any test cases, as the test subjects will be working with the tested system naturally (with real cases).

  - If test cases will be used, then:

    - Gather system user task information by:

      - Drawing from medical educational test cases

      - Drawing from real health data with the aid of a medical expert

      - Drawing from workflow analysis studies

Selection and creation of the evaluation environment

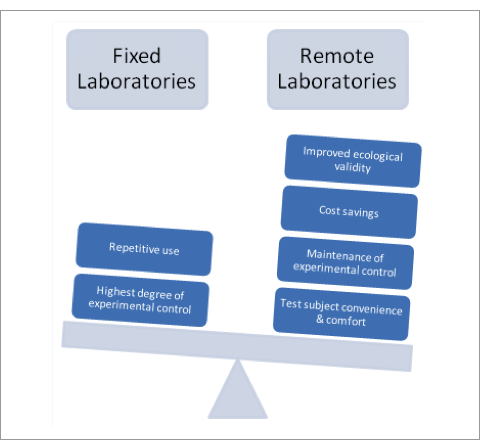

Where should the testing environment be located? As is the case with the generation of experimental tasks, the need to create an artificial evaluation environment depends on the range of experimental control chosen in the study design phase. For naturalistic studies, the end system's natural environment (the workplace) should be used for the study, whereas a more controlled study will tend to use more artificial environments, such as company testing facilities. For naturalistic studies, using the end system's workplace environment raises the probability of encountering the same usability problems that would occur once the system is released, which increases the test's ecological validity. A naturalistic approach to usability studies, however, is often unfeasible due to concerns about maintaining the safety and privacy of the subjects under study (e.g. vulnerable patients). For this reason, laboratory-based test environments are often necessary for usability studies. There are two types of laboratory setups for usability studies: fixed laboratories and remote laboratories. The following two paragraphs define the two laboratory designs and give the justification for each approach.

Fixed Laboratories: Traditionally, fixed laboratories (static rooms that are reserved and designed for testing purposes) have been used to maintain experimental controls for system tests. Such stationary laboratories normally consist of a testing area, where the test subjects are asked to use the systems under study, and an observation area, where the experimenters watch their subjects. Fixed laboratories are often equipped with ceiling mounted audio/video equipment, as well as one-way mirrors to aid with the observational recordings. Because fixed laboratories are owned and operated by the testing organization, they are the most effective environment for controlling the testing conditions (since anything that goes in or out of the laboratory can be controlled by the experimenters). They can also be convenient when there is a need to evaluate multiple systems (since minimal setup between each evaluation is required). The downside to fixed laboratories, however, is that they can be quite costly to maintain (as a physical space must be secured over the duration of the study), and their artificial nature makes it difficult to create representative testing environments, which lowers a test's ecological validity.

Remote Laboratories: The second approach to creating laboratory testing environments for usability testing studies is the remote laboratory approach. Remote laboratories exist within the end system's natural environment, while maintaining the safety and privacy of the test subjects through the use of experimental controls. Using the tested system's natural setting greatly improves the usability test's ecological validity: the more representative the test is of the actual work setting, the more likely it is that the test will capture the usability problems that are likely to occur in the field. It is also more convenient for the test subjects to have the testing performed in their workplace, as opposed to an unfamiliar testing lab in a remote location. The costs of creating a remote laboratory can also be lower than those of a fixed lab. Remote laboratories can be set up using today's low-cost, portable technology, and because there is no need to secure a separate testing facility for such tests, the costs associated with conducting remote usability tests are relatively low.

Recommended Approach:

Figure 8: Fixed Laboratories vs. Remote Laboratories

The increases in ecological validity, test subject convenience, and cost savings, coupled with the maintenance of experimental control, make the remote laboratory approach to usability testing the recommended method. There are, however, some scenarios where a fixed laboratory is necessary, or preferable to a remote laboratory. These scenarios include:

- When it is not possible to conduct the study within the system's natural environment (i.e. the natural setting is too busy/crowded to include additional evaluators).

- When the evaluation setting requires the highest degree of experimental control (i.e. if the study calls for the removal of all environmental variables).

- If the test environment is set up to evaluate multiple systems from various work settings (e.g. a company specializing in usability testing may have its own fixed laboratory, to test several systems from differing environments without having to repeatedly set up a testing lab in each of those settings).

Over the past several years, the equipment required for conducting usability studies has become more and more portable, powerful, and cost effective. This has made the 'Portable Discount Usability' (Kushniruk & Patel, 2004) approach far more feasible to conduct.

For a complete list of the equipment and software required to set up a remote usability lab, see Table 2:

Table 2: Required equipment (hardware and software) for conducting a usability test

| Equipment | Purpose | Recommendations | Examples | Cost Range |
|---|---|---|---|---|
| Computer (desktop or laptop) | Runs the system under study and/or the screen capture and video analysis software | | See the following computer/electronics store Web sites for examples: | $400 - $2000 |
| Computer microphone | Captures the 'Think Aloud' recordings. | | See the above computer/electronics store Web sites for examples. | $5 - $100 |
| Portable digital video camera | OPTIONAL (for studies that require physical reaction recordings) - Only needed when the study requires analysis of the test subjects' physical movements. | Any standard digital video camera that can output computer readable files (e.g. .avi, .mpg, etc.) will suffice. | See the above computer/electronics store Web sites for examples. | $0 - $400 |
| Portable computing device | OPTIONAL (for portable system tests only) - Runs the system under study | The portable computing device that naturally runs the system under study should be used | See the above computer/electronics store Web sites for examples | $0 - $2000 |
| Link cable | OPTIONAL (for portable system tests only) - Routes the video output from the portable device that is running the system to the computer, so that it can be screen captured. | Typically, this cable will be supplied with the portable computing device (e.g. a USB 2.0 cable). | See the above computer/electronics store Web sites for examples. | $0 - $30 |
| Screen capture audio/video recording application | Records the test subjects' interactions with the interface of the system under study. | | | $0 - $8000 |
| Portable computing device video routing application | OPTIONAL (for portable system tests only) - Routes the video output from the portable device that is running the tested system to the computer that is running the screen capture application | Any application that will stream the live video from the portable computing device as it is being operated by a user will suffice. | | $20 - $30 |
| Total | | | | $425 - $12,600 |
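As a quick check on the budget, the per-item cost ranges from Table 2 can be summed. The sketch below is only an illustration; the dictionary keys are shortened labels and the function name is an assumption. Summing the rows as listed gives $425 at the low end and $12,560 at the high end, so the $12,600 in the Total row appears to be a rounded figure.

```python
# (low, high) cost in dollars, copied from the Cost Range column of Table 2.
COST_RANGES = {
    "Computer (desktop or laptop)": (400, 2000),
    "Computer microphone": (5, 100),
    "Portable digital video camera": (0, 400),
    "Portable computing device": (0, 2000),
    "Link cable": (0, 30),
    "Screen capture application": (0, 8000),
    "Video routing application": (20, 30),
}


def total_cost_range(cost_ranges):
    """Sum the low ends and the high ends of every cost range."""
    total_low = sum(lo for lo, _ in cost_ranges.values())
    total_high = sum(hi for _, hi in cost_ranges.values())
    return total_low, total_high


total_low, total_high = total_cost_range(COST_RANGES)
print(f"${total_low:,} - ${total_high:,}")
```

Dropping the optional rows from the dictionary gives the budget for a stationary-system test only.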

How can the testing environment be set up?

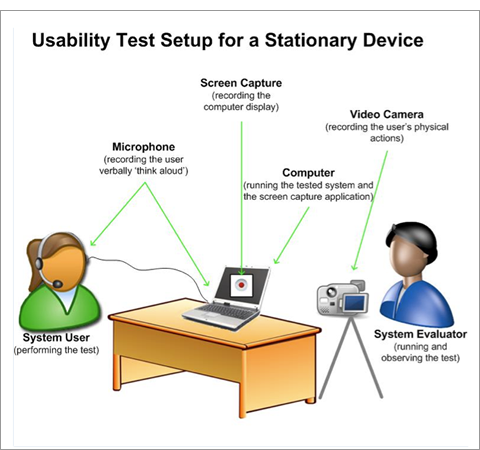

Setup for a stationary system

The general setup of the usability testing environment is the same regardless of whether a fixed laboratory or a remote laboratory is used. For a computer-based system, there are four essential components needed for collecting data in a usability test: a computer with a monitor (either a desktop or a laptop), which is used to run the tested system; a microphone, which is used to record the test subjects as they 'Think Aloud' (described in the next section); a video screen capture application, which is used to record the system's display on the monitor as the test subjects interact with the system; and (optionally) a video camera, which is used to record the users' physical actions as they perform the tests. An additional component, video analysis software, is also highly recommended for use in the analysis phase of usability tests.

During the test, the tested system runs on the computer and is presented on its monitor. As the test is conducted, the video screen capture application records all of the events displayed on the monitor and saves them as a video file. The screen capture application should also use the microphone (normally a headset worn by the test subject) to record all of the subject's spoken comments onto the same video file. Finally, the system users' physical actions (e.g. facial expressions, body language, etc.) can be recorded via portable digital video cameras or Web cameras, although in many studies this extra layer of data capture is not necessary, as the screen capture recordings often provide sufficient system use data. Physical action recordings are necessary in studies designed to capture both the subjects' interactions with an electronic system and their interactions with other physical objects (such as their paper notes). They can also provide an additional layer of user experience data (e.g. a user shown to be smiling during a system test is more likely to be satisfied with a system than a user who is scowling), or support process tracking (e.g. by tracking eye movements with special eye tracking software). In a medical setting, there are occasions when the video cameras need to be placed in unobtrusive locations (as opposed to on a tripod). Possible solutions include using ceiling mounted cameras, using compact cameras in hidden locations, or using rooms that are already equipped with unobtrusive monitoring devices (e.g. student interview rooms), which are often available in hospital settings.

The following diagram depicts the usability test setup for a stationary system:

Figure 9: Usability Test Setup for a Stationary Device

Setup for a portable system

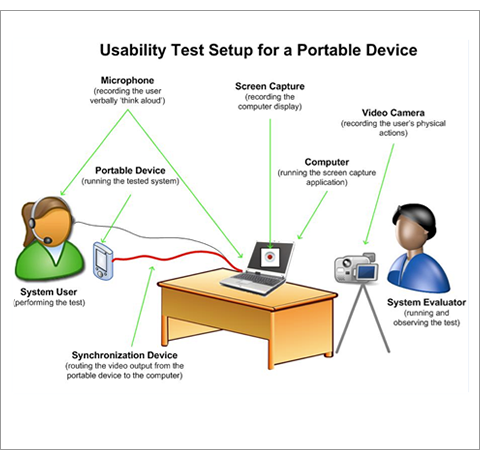

The basic setup for testing a portable computing system (e.g. smartphones, PDAs, etc.) is the same as for a stationary computing system, except that an additional device and/or application is needed to synchronize the portable system to the laptop/desktop's monitor. Currently, limits in memory size, processor speed, and available screen capture software make recording the screen interactions on a portable device unfeasible; however, it is possible to route the portable device's video output to a desktop/laptop computer, where that video can then be recorded as normal. In such a setup, while the test subject interacts with the portable system, those interactions must be synchronously displayed on the desktop/laptop monitor (via the synchronization device/application). Once the portable device's display has been routed to the desktop/laptop's monitor, the desktop/laptop's video screen capture application and microphone can be used to capture the screen and voice events (as in the previous setup).

The following diagram depicts the usability test setup for a portable system:

Figure 10: Usability Test Setup for a Portable Device

Environmental setup summary

When selecting and setting up the usability test environment, consider the following:

- 1. Whether to use a remote or a fixed laboratory.

  - a. Remote laboratories can be cost effective and they increase ecological validity; however, fixed laboratories are the best environments for maintaining experimental controls.

- 2. What hardware is needed?

  - a. The following equipment needs to be secured before running a usability test:

    - i. a computer or a laptop (to run the system under study and/or the screen capture and video analysis software)

    - ii. a computer microphone (required to capture the 'Think Aloud' recordings)

    - iii. optional - digital video cameras (to capture the test subjects' physical movements)

    - iv. optional - a portable computing device (to run the system under study, if and only if the system is naturally run on a portable device)

    - v. optional - a portable-device-to-computer link cable (to route the video output from the portable device that is running the system to the computer, so that it can be screen captured)

- 3. What software is needed?

  - a. The following applications need to be secured before running a usability test:

    - i. A screen video capture application (to record the test subjects' interactions with the interface of the system under study)

    - ii. A video analysis application to aid with transcribing, annotating, and analyzing the video data

    - iii. optional - an application to route the video output from the portable device that is running the tested system to the computer that is running the screen capture application.

- 4. How will the test be set up?

  - a. The test can be set up according to the schematics shown in figure 9 (for a stationary system), or figure 10 (for a portable system).

Selection of the study metrics (coding scheme)

Generating a coding scheme

For usability studies, a coding scheme is a complete collection of the names (codes) and definitions of the metrics used to measure the usability of a system. The coding scheme serves as the reference manual for researchers as they annotate the audio/video data from experimental sessions. Using this manual, whenever events of interest occur in the recordings, the evaluators mark the events according to their set of standardized identification labels from the coding scheme. A coding scheme should include only those categories that are relevant to the hypothesis under study, and should not include any extraneous data points. To accomplish this, it is imperative that a coding scheme is developed a priori (before the collected data is analyzed), so that the study's resulting hypothesis is not data driven (Todd & Benbasat, 1987). The codes within the scheme can be used to identify usability problems, content problems, and any user-entered system errors that occurred during the data collection phase of the study.

There are two basic types of codes: descriptive codes and interpretive codes. Descriptive codes capture objective events that were observed by the evaluators (i.e. the code 'System Speed', is marked when the user states "this system is running too slow for my liking"); whereas, interpretive codes are used to capture the evaluators' assumptions about the mental state of the test subjects (i.e. the code 'Attention' is marked when the evaluator believes that the user seems distracted during a particular part of the test).
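One way to picture a coding scheme is as a small lookup table of named, defined codes that annotations must draw from. The sketch below is a hypothetical representation, not a format the source prescribes: the class names, fields, and the timestamp are assumptions, and only the two example codes ('System Speed' and 'Attention') come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class CodeKind(Enum):
    DESCRIPTIVE = "descriptive"    # objective, observed event
    INTERPRETIVE = "interpretive"  # evaluator's inference about mental state


@dataclass(frozen=True)
class Code:
    name: str
    kind: CodeKind
    definition: str


@dataclass(frozen=True)
class Annotation:
    time_seconds: float
    code: str
    note: str


class CodingScheme:
    def __init__(self, codes):
        self._codes = {code.name: code for code in codes}

    def annotate(self, time_seconds, code_name, note=""):
        """Mark an event; reject labels that are not part of the scheme."""
        if code_name not in self._codes:
            raise KeyError(f"'{code_name}' is not defined in the coding scheme")
        return Annotation(time_seconds, code_name, note)


scheme = CodingScheme([
    Code("System Speed", CodeKind.DESCRIPTIVE, "User comments on system response time."),
    Code("Attention", CodeKind.INTERPRETIVE, "Evaluator judges the user to be distracted."),
])
# Hypothetical event at 95 seconds into a recording.
event = scheme.annotate(95.0, "System Speed", "this system is running too slow for my liking")
print(event)
```

Rejecting unknown labels at annotation time reflects the requirement that the scheme is fixed a priori, so that no new codes are improvised while reviewing the data.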

Codes can also be divided by severity. For example, one study may require that all user errors are recorded under the single code 'user error', while another study may require that all user errors are classified according to a severity ranking (i.e. for a prescription order entry system: a spelling mistake in the patient's street address could be labelled as a 'minor error', due to its minimal significance on the safety of the patient; a 'medium error' could be marked if the physician could not find the needed medication in the system, and a 'critical error' could be if the physician accidentally prescribed a potentially harmful dose of medication to a patient). The coding scheme could also capture whether or not the error was caught by the system user (i.e. a prescription error that was caught and corrected by a physician could be a 'slip', whereas an error that was not caught by the physician could be labelled as a 'mistake') (Kushniruk, Triola, Borycki, Stein, & Kannry, 2005).
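The severity ranking and the slip/mistake distinction described above can be sketched as a small record type. The class and field names are assumptions, and the three errors are the prescription order entry examples from the text, with the caught/uncaught flags chosen arbitrarily for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    MINOR = 1
    MEDIUM = 2
    CRITICAL = 3


@dataclass(frozen=True)
class UserError:
    description: str
    severity: Severity
    caught_by_user: bool

    @property
    def label(self):
        """'slip' if the user caught and corrected the error, else 'mistake'."""
        return "slip" if self.caught_by_user else "mistake"


errors = [
    UserError("Misspelled street address", Severity.MINOR, caught_by_user=True),
    UserError("Could not find the needed medication", Severity.MEDIUM, caught_by_user=False),
    UserError("Potentially harmful dose prescribed", Severity.CRITICAL, caught_by_user=False),
]

# List uncaught errors first, most severe at the top.
ranked = sorted(errors, key=lambda e: (e.caught_by_user, -e.severity.value))
for error in ranked:
    print(error.severity.name, error.label, "-", error.description)
```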

When deciding upon the coding scheme to use for a particular study, the evaluator can create an entirely new coding scheme, use a ready-made coding scheme, or revise a ready-made coding scheme to suit the needs of their unique study. There are several models that have been developed to help guide the creation of coding schemes. Models such as 'Task Action Grammar' (TAG) (Payne, 1986), 'User Concerns' (Rosson & Carroll, 1995), and most notably 'Goals, Operations, Methods, and Selection Rules' (GOMS) (Card, Moran, & Newell, 1983) have all been well documented and used in academia; however, their practical use in industry has been quite limited due to the complexity of the models (introductory researchers find the models too difficult to quickly adopt) and their general feasibility (the models can be time consuming to apply) (Hofmann, Hummer, & Blanchani, 2003). It is also generally believed that there are too many variations between different system evaluations to have a completely generic, domain-independent coding scheme. This lack of a 'one-size-fits-all' coding scheme for usability studies generally drives evaluators to take the 'minimalist approach' to generating coding schemes, where a ready-made coding scheme is tailored to meet the hypothesis-driven needs of their unique studies (Hofmann, Hummer, & Blanchani, 2003).

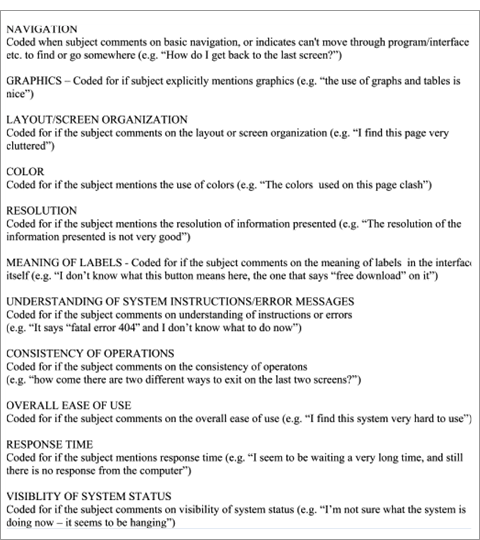

One coding scheme that has been applied in a number of usability studies for health information technology systems includes the following classical human computer interface (HCI) coding categories from Kushniruk & Patel, 2004:

- "information content (i.e., whether the information system provides too much information, too little, etc.)

- comprehensiveness of graphics and text (i.e., whether a computer display is understandable to the subject or not)

- problems in navigation (i.e., does the subject have difficulty in finding desired information or computer screen?)

- overall system understandability (i.e., understandability of icons, required computer operations and system messages)".

Codes could also be created to capture higher level cognitive processes, such as the generation of a diagnostic hypothesis by a physician during the use of a CPR system.

Coding scheme for analyzing human - computer interaction

Also from Kushniruk & Patel (2004) is an example of a coding scheme that is used to assess the ease of use of a computer system (see figure 11). For each code in the scheme, the code's definition is provided (describing when the evaluators should use the code during the annotation process), along with sample user statements from past studies in which this coding scheme was applied. This sample scheme was developed through the analysis of coding schemes defined in the past HCI and cognitive literature (Kushniruk, 2001; Kushniruk & Patel, 1995; Shneiderman, 2003).

Figure 11: An excerpt from a coding scheme for analyzing human - computer interaction - from (Kushniruk & Patel, 2004)

Further examples of coding schemes

Refining and validating the coding scheme

Once the first draft of the hypothesis-driven coding scheme has been established, the usability system evaluators need to validate the coding scheme. Before observing any user test recordings, it can often be difficult for evaluators to know exactly which data points can and should be measured from the material to validate a hypothesis. Due to this uncertainty, it may be necessary to refine the initially developed coding scheme, either by adding or removing codes. To accomplish this, after the user data has been collected in the DO stage of the Usability Test, and before the evaluators transcribe and annotate all of the test data in the STUDY stage, they should first individually code a sample test data set (e.g. the first user’s audio/video test recordings), while taking notes on how well their coding scheme captures the data needed to test their hypothesis. The evaluators then need to compare their coding scheme refinement suggestions, and come to an agreement on the most appropriate changes to make to the coding scheme (if any). This validation process should then be repeated if any changes were made to the coding scheme.
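When the evaluators compare their independently coded sample, a simple agreement figure can help show where the scheme is being applied inconsistently. The source does not prescribe an agreement measure; percent agreement and Cohen's kappa are used here as assumptions, and the code labels and the two evaluators' codings are hypothetical.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of segments to which both evaluators assigned the same code."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both evaluators must code the same non-empty segments")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Observed agreement corrected for the agreement expected by chance."""
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b)
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both evaluators used one identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned by two evaluators to the same six segments.
evaluator_1 = ["Navigation", "Navigation", "Content", "Speed", "Content", "Navigation"]
evaluator_2 = ["Navigation", "Content", "Content", "Speed", "Content", "Navigation"]

print(round(percent_agreement(evaluator_1, evaluator_2), 2))
print(round(cohens_kappa(evaluator_1, evaluator_2), 2))
```

Segments where the two codings differ are natural starting points for the discussion about which codes to add, remove, or redefine.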

Study metric selection summary

- Select, revise, or create a coding scheme to annotate the usability problems and user errors found in the collected data.

- Validate the appropriateness of the coding scheme (the coding scheme should only include codes that can be used to validate the hypothesis).

Do

Data collection

In the data collection phase of usability testing, test subjects are recorded (with both audio and video recording devices) performing tasks with the system under evaluation. Due to the myriad test design variables (e.g. what the purpose of the evaluation is, which representative tasks to use, where the study will be performed, etc.), there are countless ways in which a usability test can unfold. The test subject may need to follow an instruction set of specific tasks to carry out (e.g. “Create a new patient record whilst ‘Thinking Aloud’ or verbalizing your thoughts”), or the test subject may simply use their system in a natural context (e.g. conduct a real interview with a simulated patient, whilst ‘Thinking Aloud’) (Kushniruk, Kaufman, Patel, Levesque, & Lottin, 1996). Sometimes during the data collection phase, it may also be necessary to probe the test subjects with questions about their use of or experience with the system under study (e.g. “What do you think about the addition of the new prescription feature of the system?”). This can be done to retrieve additional information about areas of the system of particular concern, or as a means of promoting open dialog with the test subject.

Regardless of the design, task, and environmental differences, the same general method applies to collecting the user data during a usability test. Initially, before the test starts, the users should receive a brief training session to introduce them to the usability testing techniques that will be used in the study (i.e. ‘Think Aloud’), as well as introduce them to the new system, and how to use it (for new systems). After the system users understand the testing techniques and the system, the system evaluator records the users’ actions as they perform system tasks. These tasks will either be generated from the users’ natural use of the system, or from a task list that is provided to them by the system evaluators. The recorded user’s actions will include: a video recording of the system’s output device (i.e. a computer monitor) via a screen capture application, an audio recording of the user ‘Thinking Aloud’ (described below) via a computer microphone, and may also include video recordings of the user’s physical actions via the use of a separate video camera (for recording general physical actions) and/or a Web camera (for recording facial expressions).

As the selected test subjects perform their system tasks, they are asked to ‘Think Aloud’. Thinking aloud is a cognitive science technique where subjects are recorded and asked to vocalize their thoughts as they interact with a system. By having the users ‘Think Aloud’, they reveal their feelings about their user experience whilst such thoughts are still in short-term memory. Because of the constraints of long-term memory, when users are asked to reflect on their user experience afterwards (as opposed to reporting it as it happens), their comments often become more generalized and/or distorted instead of providing rich, detailed information about specific aspects of the system. Because a person’s cognitive processes travel through short-term memory, the ‘Think Aloud’ technique can be used to capture the user experiences as they happen, greatly increasing the level of detail and quality of the captured data (Ericsson & Simon, 1993).

Occasionally a set of tasks may be too difficult or too fast-paced for a user to ‘Think Aloud’ as they are performed (i.e. when performing surgery on a real-time patient simulator). Likewise, if the study design is naturalistic (i.e. an actual patient-doctor meeting), it may not be appropriate or safe for the test subject to ‘Think Aloud’ as the user tasks are being performed. In such cases, the cued recall technique can be used to help test subjects retrospectively comment on their tasks. Cued recall utilizes the video recordings of the system test to help the test subject remember their thought process as they were performing their tasks. This is achieved by playing back the audio/video recordings to the user directly after the tested event, and having the user ‘Think Aloud’ as he/she is watching the recording. To help the user recall all of the events, it may also be helpful to play the recording multiple times (especially during the critical sections), or for the evaluator to ask the test subject questions about their thoughts and feelings during particular points in the recording. The downside to cued recall is that although it greatly helps the test subjects remember their thoughts and feelings from the test, it is not a replacement for the test subjects freely thinking aloud during a test (as they may still forget some of their thoughts or they may overthink their comments). For this reason, cued recall should only be used if ‘Think Aloud’ is unfeasible.

Subject background questionnaires

Questionnaires can be used to gather background information before and/or after a subject has been tested using a system. For pre-system-test use, questionnaires can gather information that helps the evaluators better understand the users, such as their domain or technical experience (Kushniruk, Patel, Patel, & Cimino, 2001). This information can be used to fit the users into their respective user profile stereotypes, which were created in the second phase of the RR design. Post-system-test questionnaires can be used to assess changes in the users’ workplace behaviour, system or domain knowledge, and/or their opinions about the use of the tested system. For example, when testing an educational system, it may be necessary to give the system users a questionnaire/test both before and after they use the system, allowing the evaluators to assess any knowledge growth (how many questions were answered correctly before the subjects used the educational system vs. how many were answered correctly afterwards).
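The before/after comparison for an educational system can be sketched in a few lines of Python. This is only an illustration of the bookkeeping: the subject identifiers, the scores, and the function name `knowledge_growth` are hypothetical and are not taken from any study.

```python
def knowledge_growth(pre_scores, post_scores):
    """Return the change in correct answers per subject (post minus pre).

    Both arguments map a subject identifier to the number of questions
    answered correctly. Subjects missing from either questionnaire are skipped.
    """
    return {
        subject: post_scores[subject] - pre_scores[subject]
        for subject in pre_scores
        if subject in post_scores
    }


# Hypothetical questionnaire results (number of correct answers out of 10).
pre = {"S01": 4, "S02": 6, "S03": 5}
post = {"S01": 7, "S02": 6, "S04": 9}

growth = knowledge_growth(pre, post)
print(growth)
```

Subjects who completed only one of the two questionnaires are left out of the result, since no growth can be computed for them.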

The questionnaire can also be integrated with an introduction to the study for the test subjects (if it is given to the subjects before the test). Many of the methodologies used in usability testing, such as ‘Think Aloud’ (described above), may be new to the test subjects, so providing them with contextual information about the study (i.e. how the study will be performed and why this particular study design was chosen) may prove to be comforting to the subjects. For more information about questionnaires and how to create them, please see Questionnaires under the DO section of Phase 2 - Know the Users.

For examples of background questionnaires, please see the Study tab.

Study

Data Compilation

The goal of the analysis phase of usability testing is to transform the previous phase’s rich audio/video data into information that will help the system designers improve the usability of their product. Approaches used for analyzing audio/video data range from informal reviews of the material (i.e. paper notes about casual observations of system/user behaviour) to formalized methodologies. The recommended approach for analyzing usability testing data is grounded in protocol analysis, a framework formalized by Newell and Simon (1972). Protocol analysis is a set of methodologies used for collecting and analyzing (traditionally) verbal data. Bouwman (1983) outlined four degrees of protocol analysis depth (with each subsequent level increasing in complexity, and thus requiring substantially more effort to complete):

- Scanning - informally examining the protocol data through observation

- Scoring - categorizing and tabulating the frequencies of occurrence for items of interest

- Global Modeling - developing flowcharts and algorithms to represent the test subjects’ cognitive processes

- Computer Simulations - creating an executable computer simulation to reproduce the test subjects’ cognitive processes

The recommended approach for usability testing is derived from the ‘Scoring’ level of protocol analysis. Using the ‘Scoring’ approach to protocol analysis, the analysis of usability testing data takes place over three stages:

- Creating a transcript

- Annotating the transcript

- Verifying the annotated transcript

The following sections contain descriptions about how to complete each of these three analysis steps.

Stage 1: Creating a transcript

During the transcription stage, the evaluators must create a word-for-word transcription report for all of the audio/video test recordings. This transcription report serves as the foundation document for the system analysis annotations. Every event in the system test must be logged and time-stamped (as it occurs in the audio/video recordings), so that the evaluators can later code the file using the previously created coding scheme (Kushniruk, Patel, Cimino, & Barrows, 1996), (Kushniruk, Patel, & Cimino, 1997). Not only should the users’ ‘Thinking Aloud’ audio be transcribed during this stage, but any physical events of interest (i.e. “the user looks up at the ceiling and scratches his head”) should be transcribed and time-stamped as well. Specialized computer applications are not required for transcribing (and later annotating) the audio/video recordings, as this can be accomplished with hand-written notes that refer to the recording time. It is nevertheless highly recommended that such applications be used, for they can greatly increase the efficiency of the analysis. Such programs are readily available to download over the Web at little or no cost (see table 2).

The recommended audio/video analysis software (found in table 2) provides such features as:

- Automated linkage between transcribed text and audio/video recording (i.e. the evaluator can click on a quote of interest on the transcript, and have that particular audio/video section instantly play)

- Automated indexing of transcribed material (i.e. the events in the audio/video transcript could be sorted according to time of occurrence, coded annotation, etc.)

- Improved facilitation of inter-rater reliability (i.e. the applications can allow for multiple users to individually code the same recording, which could then be cross-compared)

- Pattern detection of codes in the usability data (i.e. the evaluator could quickly search for the proximity of ‘System Speed’ problem occurrences to ‘User Attention’ occurrences)
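To make the last feature concrete, the following Python sketch shows one simple way a proximity search over coded events could work. It does not reproduce the behaviour of any particular analysis application; the event list, the code names, and the function `codes_near` are hypothetical.

```python
def codes_near(events, first_code, second_code, window_seconds):
    """Find occurrences of two codes that lie close together in time.

    `events` is a list of (time_in_seconds, code) pairs. The result is a list
    of (t_first, t_second) pairs whose times differ by at most `window_seconds`.
    """
    first_times = [t for t, code in events if code == first_code]
    second_times = [t for t, code in events if code == second_code]
    return [
        (t1, t2)
        for t1 in first_times
        for t2 in second_times
        if abs(t1 - t2) <= window_seconds
    ]


# Hypothetical coded events from one recording.
events = [
    (30, "SYSTEM_SPEED"),
    (35, "USER_ATTENTION"),
    (200, "SYSTEM_SPEED"),
    (400, "USER_ATTENTION"),
]

close_pairs = codes_near(events, "SYSTEM_SPEED", "USER_ATTENTION", 10)
print(close_pairs)
```

The search compares every pair of occurrences, which is adequate for the number of events in a typical test session.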

Stage 2: Annotating the transcript

During the annotation stage, the evaluators individually review all of the audio/video test data, and mark up the transcript (created in the previous stage) with annotations from the coding scheme. To help with verifying these annotations (see stage 3), there should be a minimum of two evaluators annotating each user test transcript. Each usability event annotation should include: a time-stamp, which states exactly when the event occurred in the media (i.e. screen-capture video, 00h:03m:44s - hours : minutes : seconds), the code (i.e. “COLOR”), and a brief description of the event (i.e. “the user stated that the contrast isn’t very good in the main menu bar”).

The coding scheme should clearly define, to all system evaluators, exactly what constitutes a usability event that requires annotation. For instance, the coding scheme may define the code “Navigation” as “an issue resulting in the user having difficulty locating a system location or component”. The evaluators can then follow this standardized, study-wide definition for a “Navigation” usability event, and annotate their individual copies of the transcript with the code “Navigation”, along with a time stamp, and a short description of the event, every time they see or hear an issue that fits with this definition.
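The three parts of an annotation (time-stamp, code, description) and the study-wide code definitions can be represented as a small data structure. The Python sketch below is one possible layout under stated assumptions: the class name `Annotation`, the `CODING_SCHEME` dictionary, and the wording of the “COLOR” definition are hypothetical, while the “NAVIGATION” definition and the time-stamp format follow the examples in the text.

```python
from dataclasses import dataclass

# Hypothetical coding scheme: code -> study-wide definition.
CODING_SCHEME = {
    "NAVIGATION": "an issue resulting in the user having difficulty "
                  "locating a system location or component",
    "COLOR": "an issue with the colours or contrast of the interface",  # assumed wording
}


@dataclass(frozen=True)
class Annotation:
    media: str        # which recording the event was seen in
    timestamp: str    # formatted as "00h:03m:44s"
    code: str         # must be defined in CODING_SCHEME
    description: str  # brief description of the event

    def __post_init__(self):
        # Reject codes that are not part of the agreed coding scheme.
        if self.code not in CODING_SCHEME:
            raise ValueError(f"unknown code: {self.code}")

    def seconds(self) -> int:
        """Convert the time-stamp to seconds from the start of the recording."""
        hours, minutes, secs = (int(part[:-1]) for part in self.timestamp.split(":"))
        return hours * 3600 + minutes * 60 + secs


note = Annotation(
    media="screen-capture video",
    timestamp="00h:03m:44s",
    code="COLOR",
    description="the user stated that the contrast isn't very good in the main menu bar",
)
print(note.seconds())
```

Checking each code against the scheme when the annotation is created keeps evaluators from drifting away from the standardized definitions.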

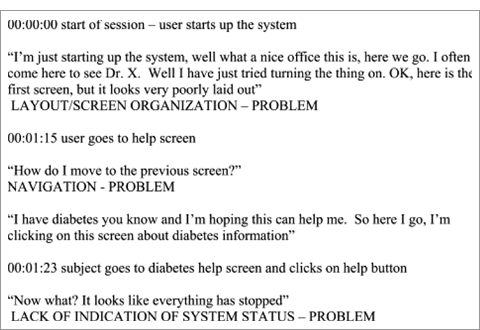

An example of an annotated transcript that utilizes the coding categories from figure 11 is shown in figure 12. This annotated transcript includes: a subject’s ‘Thinking Aloud’ report (which is marked with annotations from the coding scheme), time-stamped event descriptions (the time stamp refers to the corresponding section of the recording when the event occurred), as well as an event classification marking for problematic usability issues (marked as “PROBLEM”).

Figure 12: A coded usability test transcript excerpt from a Web-based information resource study - from (Kushniruk & Patel, 2004)

The end result of this stage will be a set of annotated transcripts from each evaluator. These individual annotated transcripts will then be compiled in the next stage.

Stage 3: Verifying the annotated transcript

It has been argued that, because protocol analysis deals with the processing of natural language, the coding of such studies can be non-reproducible and subjective (Simon, 1979). To ensure that this is not the case, inter-coder agreement by a minimum of two evaluators must be established for all transcript annotations (Todd & Benbasat, 1987), (Vessey, 1984), (Payne, Braunstein, & Caroll, 1978). To accomplish this, the evaluators need to come together to analyze and discuss the differences in their annotated transcripts. All differences need to be resolved either through discussion, or by re-reviewing the usability events in question. Once all differences are resolved, only a single, compiled, annotated set of usability test transcripts will exist for all evaluators. This super-set of annotated transcripts can now be used to interpret the usability findings.
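Before the evaluators meet, it can help to list exactly where their annotations differ. The Python sketch below is a minimal illustration, assuming each evaluator's annotations are reduced to (time-stamp, code) pairs and that two annotations refer to the same event only when both parts match exactly. The function name `find_disagreements` and the sample data are hypothetical, and the simple agreement ratio it reports is not a measure prescribed by the cited studies.

```python
def find_disagreements(coder_a, coder_b):
    """Compare two evaluators' annotations.

    Each argument is a set of (timestamp, code) pairs. Returns the events
    annotated only by the first evaluator, the events annotated only by the
    second, and the share of all distinct events that both evaluators coded.
    """
    only_a = sorted(coder_a - coder_b)
    only_b = sorted(coder_b - coder_a)
    all_events = coder_a | coder_b
    agreement = len(coder_a & coder_b) / len(all_events) if all_events else 1.0
    return only_a, only_b, agreement


# Hypothetical annotations of the same transcript by two evaluators.
evaluator_1 = {("00h:03m:44s", "COLOR"), ("00h:05m:10s", "NAVIGATION")}
evaluator_2 = {("00h:03m:44s", "COLOR"), ("00h:07m:02s", "NAVIGATION")}

only_1, only_2, agreement = find_disagreements(evaluator_1, evaluator_2)
print(only_1, only_2, round(agreement, 2))
```

The two lists of unmatched events form the agenda for the discussion; in practice, time-stamps from different evaluators may differ by a few seconds, so a tolerance would likely be needed.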

Data Compilation Summary

The following is a summary of the steps required to analyze usability testing data:

- Create a transcript from all of the audio/video test data (for the benefit of the system evaluators, this step should be conducted with the aid of a video transcription/annotation application).

- Two or more system evaluators should independently annotate the usability events found in the transcribed test data in accordance with the coding scheme.

- All system evaluators should acquire inter-coder agreement to create a single, unified, coded document. This is accomplished through collaborative discussion and review of the individually coded material.

Analysis and Interpretation

The annotated transcript created in the previous phase can be a rich source of qualitative data. This qualitative data can also be turned into quantitative data by tabulating the frequencies of the coded annotations (Babour, 1998), (Borycki & Kushniruk, 2005). With this quantitative data, inferential statistics can be applied to generate predictions for future usability events (Patel, Kushniruk, Yang, & Yale, 2000).

Because usability tests can be used to test a wide breadth of issues, any number of issues may need to be investigated during the analysis step (including, but not limited to task accuracy, user preference data, time to completion of task, frequency and classes of problems encountered, or even the relationships that such usability aspects have on each other) (Kushniruk & Patel, 2004). These results are typically formatted as summary tables, which indicate the study findings (i.e. the frequency and type of user problems that occurred whilst testing a system).
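Tabulating the frequencies of coded annotations into a summary table can be sketched with Python's standard `collections.Counter`. The annotation data, code names, and the helper names `tabulate_codes` and `format_summary` are hypothetical and serve only to illustrate the step.

```python
from collections import Counter

# Hypothetical compiled annotations: (subject, code) pairs.
annotations = [
    ("S01", "NAVIGATION"),
    ("S01", "COLOR"),
    ("S02", "NAVIGATION"),
    ("S02", "SYSTEM_SPEED"),
    ("S03", "NAVIGATION"),
    ("S03", "COLOR"),
]


def tabulate_codes(annotations):
    """Count how often each code occurs across all subjects."""
    return Counter(code for _subject, code in annotations)


def format_summary(frequencies):
    """Render the counts as a plain-text summary table, most frequent first."""
    rows = [f"{'Code':<14}{'Occurrences':>11}"]
    for code, count in frequencies.most_common():
        rows.append(f"{code:<14}{count:>11}")
    return "\n".join(rows)


frequencies = tabulate_codes(annotations)
print(format_summary(frequencies))
```

The same counts could be broken down per subject or per task before any inferential statistics are applied.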

Analysis and Interpretation Summary

The following are the key points of interest from the interpretation of findings phase:

- Usability testing data can be presented both qualitatively and quantitatively.

- Inferential statistics can be applied to the quantitative usability data to generate predictions about usability events.

- Usability testing results can be applicable to several areas of system use, including but not limited to: task accuracy, user preference data, time to completion of task, frequency and classes of problems encountered, or even the relationships that such usability aspects have on each other (Kushniruk & Patel, 2004).

- Usability results are often formatted as summary tables of statistics.

Act

Iterative input into design

The information gathered from the rapid response evaluation should be passed on to the system designers, who could then use this information to improve the design of their system. If any changes are then made to the system by its designers, the testing process should be repeated to determine if any problems were created by the system changes. This can be seen as a means of incorporating continual improvement into the developmental lifecycle of information systems.

PDSA Summary

Plan

- Select a sample

- Choose the number of test subjects

- i. A testing group of 8-10 subjects can capture 80% of a system’s usability issues; however, 15-20 subjects are needed to conduct inferential statistics.

- Select the test subjects

- i. The selected test subjects should be representative of the end-system user population (as defined in the user profile set).

- ii. There should be at least one representative user from each user profile, and proportionally more users from the more prominent user groups.

- Create the study sample groups

- i. Within-group or between-group study designs can be chosen (the choice is dependent on the objective of the study).

- Select a task

- Determine if real cases will be used for the test, or if test case scenarios will be used instead.

- i. If real cases will be used, then:

- 1. There is no need for the usability test designers to design any test cases, as the test subjects will be working with the tested system naturally (with real cases).

- ii. If test cases will be used, then:

- 1. Gather system user task information by:

- a. Drawing from medical educational test cases

- b. Drawing from real health data with the aid of a medical expert

- c. Drawing from workflow analysis studies

- Select the test environment

- Whether or not to use a remote or fixed laboratory

- i. Remote laboratories can be cost effective and they increase ecological validity; however, fixed laboratories are the best environments for maintaining experimental controls.

- What hardware is needed?

- i. The following equipment needs to be secured before running a usability test:

- a computer or a laptop (to run the system under study and/or the screen capture and video analysis software)

- a computer microphone (required to capture the ‘Think Aloud’ recordings)

- optional - digital video cameras (to capture the test subjects’ physical movements)

- optional - a portable computing device (to run the system under study, if and only if the system is naturally run on a portable device)

- optional - a portable-device-to-computer link cable (to route the video output from the portable device that is running the system to the computer, so that it can be screen captured)

- What software is needed?

- i. The following applications need to be secured before running a usability test:

- A screen video capture application (to record the test subjects’ interactions with the interface of the system under study)

- A video analysis application to aid with transcribing, annotating, and analyzing the video data

- optional - an application to route the video output from the portable device that is running the tested system to the computer that is running the screen capture application.

- How will the test be set up?

- i. The test can be set up according to the schematics shown in figure 9 (for a stationary system), or figure 10 (for a portable system).

- Select the study metrics

- Select, revise, or create a coding scheme to annotate the usability problems and user errors found in the collected data.

- Validate the appropriateness of the coding scheme (the coding scheme should only include codes that can be used to validate the hypothesis).

Do

The test subjects should be provided with a brief, standardized system training session in order to provide them with an overview of the system and its use, and to provide them with an introduction to the usability testing techniques that will be used during the evaluation.

If the test design is a simulation, provide the test subjects with a list of tasks to complete for the evaluation (i.e. through a sample scenario).

Record the test subjects’ actions as they use the system. Recordings should be made of the system’s screen (through screen capture software), the users’ voices, as they ‘Think Aloud’ (through a microphone), and the users’ physical actions (through a digital video camera).

(Optional) - Provide questionnaires to the test subjects to gather additional feedback about their system test and their background.

Study

Compile the test result data

- Create a transcript from all of the audio/video test data (for the benefit of the system evaluators, this step should be conducted with the aid of a video transcription/annotation application).

- Two or more system evaluators should independently annotate the usability events found in the transcribed test data in accordance with the coding scheme.

- All system evaluators should acquire inter-coder agreement to create a single, unified, coded document. This is accomplished through collaborative discussion and review of the individually coded material.

Analyze and interpret the compiled results

- Usability testing data can be presented both qualitatively and quantitatively.

- Inferential statistics can be applied to the quantitative usability data to generate predictions about usability events.

- Usability testing results can be applicable to several areas of system use, including but not limited to: task accuracy, user preference data, time to completion of task, frequency and classes of problems encountered, or even the relationships that such usability aspects have on each other (Kushniruk & Patel, 2004).

- Usability results are often formatted as summary tables of statistics.

Act

Usability testing results should be given to the system designers so that usability improving revisions can be made to their systems.

System improvement via usability testing is an iterative process. Each time a system undergoes changes, it should be re-tested for usability issues.

References

- Babour, R. (1998). The Case of Combining Quantitative Approaches in Health Services Research. Journal of Health Services Research Policy , 4 (1), 39-43.

- Borycki, E., & Kushniruk, A. (2005). Identifying and Preventing Technology-Induced Error Using Simulations: Application of Usability Engineering Techniques. Healthcare Quarterly , 8, 99-105.

- Bouwman, M. J. (1983). The Use of Protocol Analysis in Accounting Research. Unpublished (working paper) .

- Card, S. K., Moran, T. P., & Newell, A. (1983). The Psychology of Human-Computer Interaction. Hillsdale: Lawrence Erlbaum Associates.

- Ericsson, K. A., & Simon, H. A. (1993). Protocol analysis: Verbal reports as data (Revised edition). Cambridge: MIT Press.

- Hofmann, B., Hummer, M., & Blanchani, P. (2003). State of the Art: Approaches to Behaviour Coding in Usability Laboratories in German-Speaking Countries. In M. Smith, & C. Stephanidis, Human - Computer Interaction - Theory and Practice (pp. 479-483). Sankt Augustin: Lawrence Erlbaum Associates.

- Kushniruk, A. (2001). Analysis of complex decision making processes in health care: cognitive approaches to health informatics. Journal of Biomedical Informatics (34), 365-376.

- Kushniruk, A. W., & Patel, V. L. (2004). Cognitive and usability engineering methods for the evaluation of clinical information systems. Journal of Biomedical Informatics (37), 56-76.

- Kushniruk, A. W., Kaufman, D. R., Patel, V. L., Levesque, Y., & Lottin, P. (1996). Assessment of a Computerized Patient Record System: A Cognitive Approach to Evaluating Medical Technology. M. D. Computing , 13 (5), 406-415.

- Kushniruk, A. W., Patel, V. L., & Cimino, J. J. (1997). Usability testing in medical informatics: cognitive approaches to evaluation of information systems and user interfaces. Proc AMIA Annu Fall Symp , 218-222.

- Kushniruk, A. W., Patel, V. L., Cimino, J. J., & Barrows, R. A. (1996). Cognitive evaluation of the user interface and vocabulary of an outpatient information system. Proc AMIA Annu Fall Symp , 22-26.

- Kushniruk, A., & Patel, V. (1995). Cognitive computer-based video analysis: its application in assessing the usability of medical systems. Medinfo , 1566-1569.

- Kushniruk, A., Patel, C., Patel, V. L., & Cimino, J. J. (2001). ''Televaluation'' of clinical information systems: an integrative approach to assessing Web-based systems. International Journal of Medical Informatics , 61 (1), 45-70.

- Kushniruk, A., Triola, M., Borycki, E., Stein, B., & Kannry, J. (2005). Technology induced error and usability: The relationship between usability problems and prescription errors when using a handheld application. International Journal of Medical Informatics (74), 519-526.

- Newell, A., & Simon, H. A. (1972). Human Problem Solving. Englewood Cliffs, New Jersey: Prentice-Hall.

- Nielsen, J. (1993). Usability engineering. New York: Academic Press.

- Patel, V. L., Kushniruk, A. W., Yang, S., & Yale, J. F. (2000). Impact of a Computer-Based Patient Record System on Data Collection, Knowledge Organization and Reasoning. Journal of the American Medical Informatics Association , 7 (6), 569-585.

- Payne, J. W., Braunstein, M. L., & Caroll, J. S. (1978). Exploring Predecisional Behavior: An Alternative Approach to Decision Research. Organizational Behavior and Human Performance , 22 (1), 17-44.

- Payne, S. J. (1986). Task-Action Grammars: A Model of the Mental Representation of Task Languages. In S. J. Payne, & T. R. Green, Human-computer interaction (Vol. 2, pp. 93-133). Lawrence Erlbaum Associates.

- Rosson, M. B., & Carroll, J. M. (1995). Narrowing the specification-implementation gap in scenario-based design. In J. M. Carroll, Scenario-based design: envisioning work and technology in system development (pp. 247-278). New York: John Wiley & Sons, Inc.

- Rubin, J. (1994). Handbook of usability testing: how to plan, design and conduct effective tests. New York: Wiley.

- Shneiderman, B. (2003). Designing the user interface (4th ed.). New York: Addison-Wesley.

- Simon, H. A. (1979). INFORMATION PROCESSING MODELS OF COGNITION. Annual Review of Psychology , 311-396.

- Todd, P., & Benbasat, I. (1987). Process Tracing Methods in Decision Support Systems Research: Exploring the Black Box. MIS Quarterly , 11 (2), 493-512.

- Vessey, I. (1984). An investigation of the Psychological Processes Underlying the Debugging of Computer Programs. unpublished Ph.D. thesis University of Queensland.
